3.3.3 Training Neural Networks

Intro

Neural network training is the process of adjusting a network’s parameters (weights and biases) to minimize a loss function over training data. Conceptually, a neural network is a function

$$\hat{y} = f(x; \theta)$$

parameterized by $\theta$, mapping inputs $x$ to outputs $\hat{y}$. Training involves feeding inputs forward through the network, computing a loss $L$ (e.g., mean-squared error or cross-entropy) between $\hat{y}$ and the true target $y$, and then updating $\theta$ to reduce this loss. The standard update rule uses gradient descent:

$$\theta \leftarrow \theta - \eta \, \nabla_\theta L(\theta)$$

where $\eta$ is the learning rate. This process repeats over many epochs, gradually improving the network’s accuracy. Training thus optimizes the cost function to find weights that best fit the data.
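To make the update rule concrete, here is a minimal sketch of gradient descent on a one-parameter toy loss. The loss (θ − 3)², the helper name `gradient_descent`, and the chosen learning rate are illustrative assumptions, not part of any framework.

```python
def gradient_descent(grad, theta0, lr=0.1, steps=50):
    """Repeatedly apply theta <- theta - lr * grad(theta)."""
    theta = theta0
    for _ in range(steps):
        theta = theta - lr * grad(theta)
    return theta

# Toy loss L(theta) = (theta - 3)^2, whose gradient is 2 * (theta - 3).
theta_star = gradient_descent(lambda t: 2.0 * (t - 3.0), theta0=0.0)
print(theta_star)  # close to the minimizer theta = 3
```

With this learning rate, each step shrinks the distance to the minimum by a constant factor; a much larger learning rate would overshoot and diverge instead.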

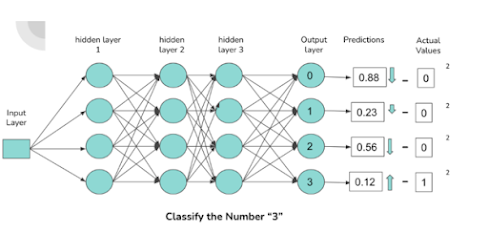

Figure: Illustration of a feedforward neural network with 3 hidden layers classifying the digit “3.” Inputs propagate forward through weighted connections; errors are backpropagated to update weights.

In practice, training proceeds as follows (pseudo-code):

    initialize model parameters θ randomly
    for epoch = 1 to N_epochs:
        for each batch (inputs X, targets Y) in training data:
            outputs = forward_pass(model, X)
            loss = Loss(outputs, Y)
            grads = backpropagate(loss, model)   # compute ∂loss/∂θ via backpropagation
            θ = θ - η * grads                    # update parameters (gradient descent step)

Here backpropagation computes the gradient of the loss with respect to each weight using the chain rule. As NVIDIA explains, “Backpropagation is the mechanism by which components that influence the output of a neuron (bias, weights, activations) are iteratively adjusted to reduce the cost function”. Each update moves the parameters opposite to the gradient, aiming for a (local) minimum of the loss. Frameworks like TensorFlow and PyTorch automate this process, abstracting the math but still relying on the same principles.
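As a runnable illustration of the same loop, the sketch below trains a one-feature linear model with mini-batch gradient descent in plain Python. The function name `train_linear`, the synthetic data, and the hyperparameters are assumptions chosen for the example; the gradients are derived by hand with the chain rule, which is the step a framework's automatic differentiation would perform.

```python
import random

def train_linear(data, lr=0.05, epochs=200, batch_size=4, seed=0):
    """Mini-batch gradient descent for y ≈ w * x + b with MSE loss."""
    rng = random.Random(seed)
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)  # random initialization
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)
        for i in range(0, len(data), batch_size):
            batch = data[i:i + batch_size]
            grad_w = grad_b = 0.0
            for x, y in batch:
                err = (w * x + b) - y              # forward pass minus target
                grad_w += 2 * err * x / len(batch) # d(MSE)/dw via chain rule
                grad_b += 2 * err / len(batch)     # d(MSE)/db via chain rule
            w -= lr * grad_w                       # gradient descent step
            b -= lr * grad_b
    return w, b

# Synthetic, noise-free data generated from y = 2x + 1.
points = [(x / 10, 2.0 * (x / 10) + 1.0) for x in range(-10, 11)]
w, b = train_linear(points)
print(w, b)  # should approach 2 and 1
```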

Batch, Mini-batch and Stochastic Training

Batch Gradient Descent uses the entire training set to compute each update. This yields smooth, stable convergence (directly toward a minimum) but can be very slow and memory-intensive for large datasets. Stochastic Gradient Descent (SGD), by contrast, updates the weights after each individual training example, making updates very fast and noisy (the loss may oscillate). In practice, most training uses Mini-batches: splitting the data into small batches (e.g. 32–256 examples) and computing gradients on each batch. Mini-batch training strikes a balance: it benefits from vectorized GPU computations and smoother convergence than pure SGD, while requiring less memory than full-batch.

Key trade-offs of these strategies include:

- Convergence Stability: Full-batch gradient descent yields the most accurate gradient but is slow per update. Stochastic updates introduce noise, which can help escape shallow local minima but may also cause oscillations. Mini-batches yield intermediate stability.

- Speed and Throughput: Smaller batches allow quicker updates and are well-suited to online or streaming data. Large batches exploit GPU parallelism better, but each update is computationally heavier.

- Memory Usage: Full-batch requires storing the entire dataset in memory. Mini-batch uses far less memory (just the batch and model). SGD has the lowest memory footprint.

A comparison of these approaches is summarized below:

| Training Strategy | Batch Size | Convergence | Speed per Epoch | Memory Use | Typical Use Cases |
|---|---|---|---|---|---|
| Batch GD | Entire dataset | Smooth, stable | Slow (few updates) | High | Small datasets; final tuning |
| Mini-batch GD | 32–256 samples | Balance of stability and noise | Fast (GPU-friendly) | Moderate | Most deep learning training |
| Stochastic GD (SGD) | 1 sample | Noisy, may overshoot | Very fast per update (many updates) | Low | Online learning; streaming |
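The three strategies differ only in the batch size passed to the data iterator. The sketch below, using a hypothetical `iter_batches` helper and an assumed dataset of 1,000 samples, counts how many parameter updates each strategy performs per epoch.

```python
def iter_batches(samples, batch_size):
    """Yield consecutive slices of `samples` with at most `batch_size` items."""
    for start in range(0, len(samples), batch_size):
        yield samples[start:start + batch_size]

samples = list(range(1000))  # stand-in for 1,000 training examples

updates_per_epoch = {
    "batch": sum(1 for _ in iter_batches(samples, len(samples))),
    "mini-batch (64)": sum(1 for _ in iter_batches(samples, 64)),
    "stochastic": sum(1 for _ in iter_batches(samples, 1)),
}
print(updates_per_epoch)
# {'batch': 1, 'mini-batch (64)': 16, 'stochastic': 1000}
```

Fewer, larger batches mean fewer but more accurate updates per epoch; the last mini-batch here holds only the 40 leftover samples.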

Optimization Algorithms

Beyond vanilla gradient descent, many algorithms improve training speed and convergence:

- Momentum (Classical or Nesterov): Keeps an exponentially weighted moving average of past gradients. Writing $g_t = \nabla_\theta L(\theta_{t-1})$, the update rule is $v_t = \beta v_{t-1} + (1-\beta)\, g_t$, $\theta_t = \theta_{t-1} - \eta\, v_t$. Momentum accelerates learning in consistent directions and dampens oscillations, effectively smoothing the descent path.

- Adaptive Gradient Methods: Algorithms like Adagrad, RMSProp, and Adam adapt the learning rate for each parameter based on past gradients. For example, Adam maintains first ($m_t$) and second ($v_t$) moment estimates of the gradient:

$$m_t = \beta_1 m_{t-1} + (1-\beta_1)\, g_t, \qquad v_t = \beta_2 v_{t-1} + (1-\beta_2)\, g_t^2$$

with bias-corrected $\hat{m}_t = m_t / (1-\beta_1^t)$ and $\hat{v}_t = v_t / (1-\beta_2^t)$, then updates $\theta_t = \theta_{t-1} - \eta\, \hat{m}_t / (\sqrt{\hat{v}_t} + \epsilon)$. Adam often yields faster convergence and is relatively robust to hyperparameter choices. In practice, SGD with momentum and Adam are among the most popular optimizers. Each has trade-offs: Adam typically converges in fewer epochs, while SGD (especially with momentum) can generalize slightly better on some tasks, potentially at the cost of more epochs.
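The two update rules can be sketched as single-parameter step functions. This is a minimal illustration that assumes the exponential-moving-average form of momentum and the standard Adam formulation with bias correction; the function names, the toy loss (θ − 3)², and the hyperparameter values are chosen for the example only.

```python
import math

def momentum_step(theta, grad, v, lr=0.1, beta=0.9):
    """One momentum update: v is a moving average of past gradients."""
    v = beta * v + (1 - beta) * grad
    return theta - lr * v, v

def adam_step(theta, grad, m, v, t, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update with bias-corrected first and second moments."""
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    m_hat = m / (1 - beta1 ** t)   # bias correction (t starts at 1)
    v_hat = v / (1 - beta2 ** t)
    theta = theta - lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta, m, v

def grad(theta):
    """Gradient of the toy loss (theta - 3)^2."""
    return 2.0 * (theta - 3.0)

theta_m, vel = 0.0, 0.0
for _ in range(500):
    theta_m, vel = momentum_step(theta_m, grad(theta_m), vel)

theta_a, m, v = 0.0, 0.0, 0.0
for t in range(1, 501):
    theta_a, m, v = adam_step(theta_a, grad(theta_a), m, v, t)

print(theta_m, theta_a)  # both should end near the minimizer 3
```

Note that Adam's first step has magnitude close to the learning rate regardless of the gradient's scale, which is one reason it tends to need less learning-rate tuning than plain gradient descent.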

Convergence Challenges and Remedies

Training deep networks faces issues like vanishing/exploding gradients, overfitting, and plateaus. For example, in very deep networks, gradients can diminish (sigmoid/tanh units) or blow up (unbounded ReLU without proper init). Modern techniques mitigate these problems:

- Weight Initialization: Schemes like Xavier/He initialization set initial weights to keep gradient variances stable.

- Batch Normalization: Inserted between layers to stabilize the input distribution of each layer, allowing higher learning rates.

- Gradient Clipping: Caps gradients to prevent extreme updates (useful in RNN training).

- Learning Rate Schedules: Decay learning rate over epochs (step decay, cosine annealing, or warm restarts) to fine-tune convergence.

- Regularization: Techniques like dropout (randomly disabling neurons) and weight decay prevent overfitting. Dropout also helps reduce co-adaptation of features.

- Residual Connections: Architectures like ResNets allow very deep networks by providing identity “shortcuts” that mitigate vanishing gradients.
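Two of the remedies above are short enough to sketch directly: gradient clipping by global norm and a cosine-annealed learning rate. The helper names `clip_by_global_norm` and `cosine_lr` and their default values are assumptions for illustration; frameworks provide their own equivalents.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale the gradient vector so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]

def cosine_lr(step, total_steps, lr_max=0.1, lr_min=0.0):
    """Cosine annealing: decay smoothly from lr_max to lr_min."""
    progress = step / total_steps
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + math.cos(math.pi * progress))

# A gradient of norm 5 is rescaled to norm 1, keeping its direction.
clipped = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
print(clipped)  # approximately [0.6, 0.8]

# Learning rate at the start, midpoint, and end of a 100-step schedule.
print([cosine_lr(s, 100) for s in (0, 50, 100)])  # approximately [0.1, 0.05, 0.0]
```

Clipping by norm preserves the update direction and only limits its size, which is why it is usually preferred over clipping each component independently.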

The choice of optimizer, initialization, batch size, and these techniques all influence convergence. For instance, using synchronous distributed training (averaging gradients across GPUs each step) typically yields more stable convergence than asynchronous updates. In any case, monitoring training/validation loss and adjusting strategies (e.g. lowering the learning rate or adding regularization) is essential when convergence issues arise.
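One common way to act on a stalled validation loss is to lower the learning rate automatically. The sketch below is a simplified, hypothetical `reduce_on_plateau` rule (not any framework's exact scheduler): it halves the learning rate whenever the validation loss has failed to improve for `patience` consecutive epochs.

```python
def reduce_on_plateau(val_losses, lr=0.1, factor=0.5, patience=2):
    """Return the learning rate used at each epoch.

    The rate is multiplied by `factor` after `patience` consecutive
    epochs without a new best validation loss.
    """
    best = float("inf")
    stale = 0
    history = []
    for loss in val_losses:
        history.append(lr)
        if loss < best:
            best, stale = loss, 0
        else:
            stale += 1
            if stale >= patience:
                lr *= factor
                stale = 0
    return history

# Made-up validation losses with two plateaus.
lrs = reduce_on_plateau([1.0, 0.8, 0.81, 0.82, 0.7, 0.71, 0.72, 0.73])
print(lrs)
# [0.1, 0.1, 0.1, 0.1, 0.05, 0.05, 0.05, 0.025]
```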

Distributed and Accelerated Training

Modern training leverages specialized hardware and distributed systems to accelerate learning:

- GPUs and TPUs: Training large networks is computationally intensive. Graphics Processing Units (GPUs) accelerate matrix multiplications and other parallel operations; for example, NVIDIA A100 GPUs enable tens of TFLOPS of throughput. Google’s Tensor Processing Units (TPUs) are custom accelerators optimized for neural nets, particularly effective for large models and batches. Industry reports show TPUs can achieve much faster training speeds and lower costs than CPUs for recommendation and vision models. However, scaling beyond a single device is not automatic: distributed multi-GPU/TPU setups add communication and configuration complexity.

- Data-Parallel Distributed Training: Frameworks like TensorFlow and PyTorch allow a model to be replicated across multiple GPUs or machines. Each replica processes a different subset of the data (its share of the mini-batch) and computes gradients, which are then averaged across replicas (synchronous training). TensorFlow’s tf.distribute API offers synchronous strategies such as MirroredStrategy for multi-GPU training and TPUStrategy for Cloud TPUs, as well as ParameterServerStrategy for asynchronous training. PyTorch’s torch.distributed package provides DistributedDataParallel (DDP), which replicates the model across processes and uses fast collective communication (NCCL) to aggregate gradients. These approaches can achieve near-linear speedups as devices are added, at the cost of communication overhead and synchronization.

- Frameworks: High-level frameworks manage training infrastructure details. For instance, TensorFlow (with Keras) offers Model.fit() and distribution strategies out-of-the-box. PyTorch Lightning is a popular high-level interface for PyTorch that organizes boilerplate into a LightningModule, allowing researchers to focus on model logic. Lightning “removes boilerplate and unlocks scalability,” enabling multi-GPU/TPU training without changing the core model code. In sum, frameworks abstract much of the complexity, but under the hood they implement the same gradient-descent-based training routines.
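The core of synchronous data-parallel training can be simulated on one machine without any distributed library. In the sketch below, two "replicas" are just two slices of a toy dataset, and `all_reduce_mean` is a hypothetical stand-in for the collective operation (such as an NCCL all-reduce) that real frameworks use to average gradients.

```python
def mse_grad(w, shard):
    """Gradient of mean squared error for y ≈ w * x over one data shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def all_reduce_mean(values):
    """Stand-in for a collective all-reduce: every replica gets the average."""
    return sum(values) / len(values)

# Toy data from y = 3x, split evenly across two simulated replicas.
data = [(float(x), 3.0 * x) for x in range(1, 9)]
shards = [data[0:4], data[4:8]]

w = 0.0
for _ in range(100):
    local_grads = [mse_grad(w, shard) for shard in shards]  # computed in parallel in practice
    w -= 0.01 * all_reduce_mean(local_grads)                # identical update on every replica

print(w)  # approaches 3
```

Because the shards are equal in size, the averaged gradient equals the gradient over the combined batch, so the replicas stay in lockstep and behave like one device processing a larger batch.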

Use Cases and Industry Examples

Neural network training underpins many real-world applications:

- Healthcare: Deep learning is revolutionizing medical imaging. For example, Convolutional Neural Networks analyze X-rays, MRIs, and CT scans to detect tumors and anomalies. NVIDIA reports that startups like Subtle Medical use deep learning (deployed via NVIDIA’s Clara platform) to accelerate PET scans and reduce radiation dose; notably, SubtlePET became the first FDA-cleared AI product in nuclear medicine. Deep networks also segment tissues and classify pathology in radiology, dramatically speeding radiologists’ workflows.

- Finance: Neural networks detect fraud and predict markets. For instance, graph neural networks (GNNs) analyze transaction networks to flag credit card fraud. NVIDIA’s data scientists describe an end-to-end fraud detection system combining GNNs (to model relationships between accounts/devices) with traditional models, achieving higher accuracy and fewer false positives than rule-based systems. Similarly, recurrent and convolutional networks are employed for credit scoring, algorithmic trading, and risk modeling in finance.

- Autonomous Vehicles: End-to-end deep learning powers self-driving cars. NVIDIA demonstrated that a CNN can learn steering control directly from camera input: a network trained on human driving data mapped raw front-camera images to steering angles. This end-to-end model worked on roads even without lane markings, showing that internal layers implicitly learned useful features. The image below shows NVIDIA’s prototype car performing such a task.

Figure: NVIDIA’s self-driving car research platform uses an onboard neural network (trained via backpropagation) to map camera inputs to steering commands.

Other sectors use similar training methods: in retail, large recommendation systems are trained on GPUs/TPUs for personalized ads (e.g. Snapchat’s ad-ranking models trained on TPUs); in transportation, neural nets process LIDAR and camera data for obstacle detection; and in robotics, reinforcement learning (which also uses gradient-based policy updates) trains agents for control.

Summary

Training neural networks involves a blend of theory and practice: from foundational gradient-descent algorithms and backpropagation to modern optimizers (Adam, RMSprop), batching strategies, and hardware-accelerated implementations. Convergence requires careful tuning (learning rates, initialization, regularization) and may leverage advanced tricks like normalization and residual connections. Today’s frameworks (TensorFlow, PyTorch, PyTorch Lightning) and hardware (GPUs/TPUs, distributed clusters) have made training powerful deep models more accessible. Across domains – from medical imaging to finance and self-driving cars – these trained networks are driving cutting-edge solutions. A solid grasp of both the underlying math and the practical techniques is essential for effective neural network training.

References

[1] R. Alake, A Data Scientist’s Guide to Gradient Descent and Backpropagation Algorithms, NVIDIA Developer Blog, Feb. 09, 2022.

[2] S. Liu, B. Rees, and P. Patangia, Supercharging Fraud Detection in Financial Services with Graph Neural Networks, NVIDIA Developer Blog, Oct. 28, 2024.

[3] M. Bojarski et al., End-to-End Deep Learning for Self-Driving Cars, NVIDIA Developer Blog, Aug. 17, 2016.

[4] I. Salian, How AI Is Changing Medical Imaging, NVIDIA Blog, Mar. 04, 2019.

[5] Snap Inc. Engineering, Training Large-Scale Recommendation Models with TPUs, Mar. 28, 2022.

[6] PyTorch Lightning Documentation, Lightning in 15 minutes, lightning.ai (2025).
